Reaction to the first self-driving car fatality.

The recent traffic fatality involving an Uber self-driving car made headlines around the world. It reminded me of a blog I wrote on the topic almost a year ago to the day, titled “Would you jump in front of a self-driving car?” That blog discussed the theoretical possibility of a self-driving car hitting a pedestrian. Unfortunately, we are now looking at the repercussions of an actual event of this nature. The question about the technology remains: “How safe do self-driving cars have to be?”

Source: Uber.com

Self-driving cars and other forms of automated transportation were a theme discussed in detail at the Abundance 360 conference I recently attended. Self-driving, or autonomous, vehicles have the potential to disrupt a major part of the workforce. Approximately 8% of the North American workforce is employed directly or indirectly in the transportation industry (long-haul truck drivers, delivery drivers, taxi drivers, etc.), so the prospect of these jobs disappearing is worrisome for many.

Uber’s Chief Product Officer attended A360, and in his session he stated that Uber will have 24,000 self-driving cars on the road in 2019 in jurisdictions that allow it. It is foolish to think that these technologies are science fiction that won’t be broadly adopted anytime soon. Not to state the obvious, but 2019 is next year.

Source: Pexels.com

The next phase of Uber’s master plan is to develop and launch autonomous passenger drones by 2021. This type of drone is already being tested in Dubai and parts of Africa, where flight-testing regulations are more accommodating and authorities are eager to be first. Whether the recent fatality delays Uber’s ambitious plans remains to be seen.

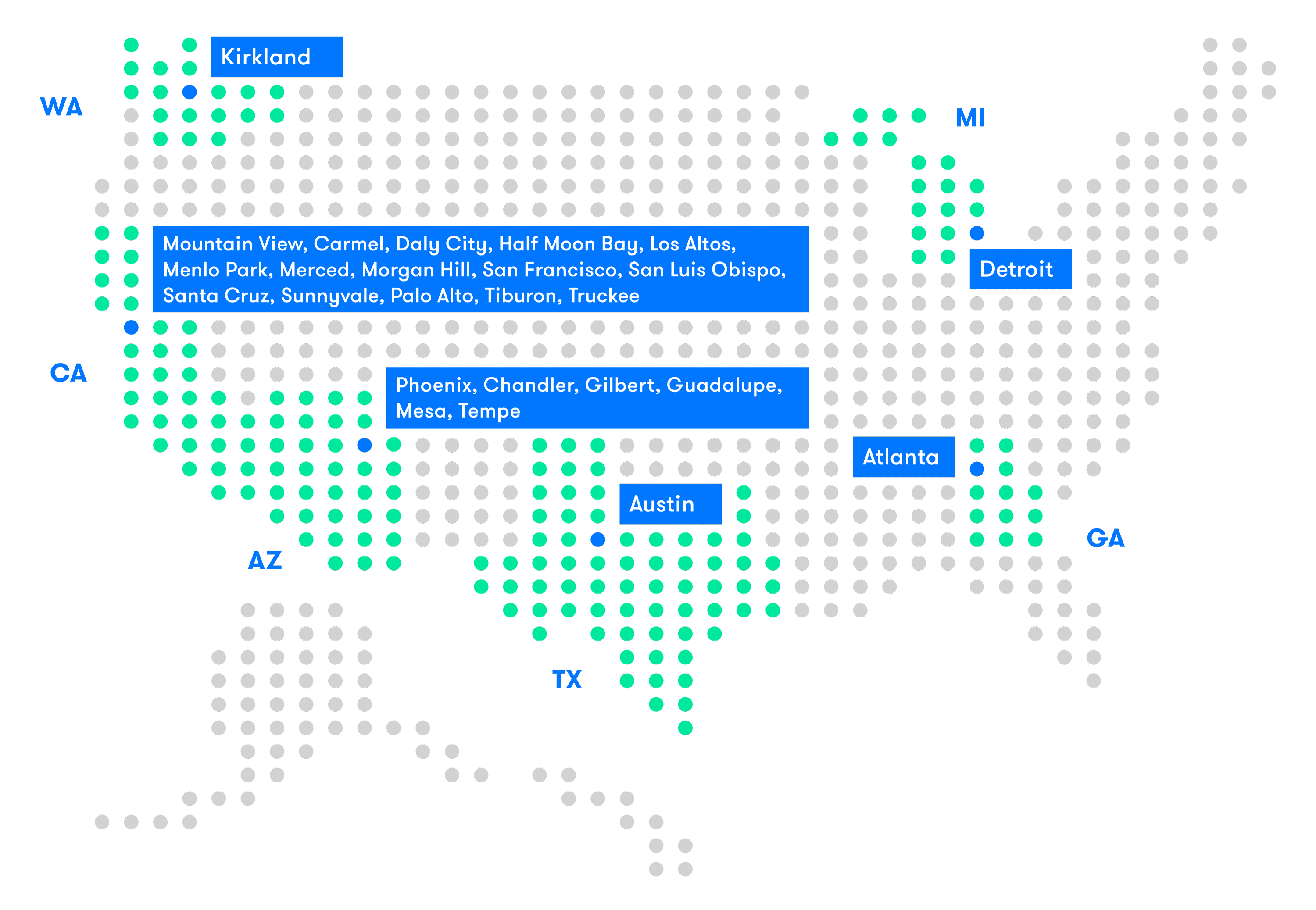

Not to be outdone, Waymo, Google’s self-driving vehicle offshoot, is launching self-driving cars in 5 cities this year with no back-up driver. To date, nearly every jurisdiction that has allowed self-driving cars to be tested on public roads has required a back-up driver behind the wheel to oversee the operation at all times. Clearly Waymo is confident enough in its technology to launch live road testing without one, and it plans to scale soon to a full-service self-driving taxi operation.

Google spin-off Waymo’s test cities. Source: Waymo.com/press

While the first traffic fatality involving a self-driving car is certainly a tragedy, it is unrealistic to expect perfect technology when high-speed vehicles and potentially dangerous situations are involved. Take air travel as an example. If someone invited you to travel with them at 900km/h, ten kilometers above the surface of the earth, in a sealed metal tube controlled by a computer, you might think twice about joining them. But if the same person invited you to fly to Las Vegas for the weekend, you probably wouldn't hesitate to take the flight. Air travel is not inherently safe: any number of things can go wrong and cause a crash, and crashes still happen on rare occasions, yet the safety track record of the airline industry is remarkable. Driving on the ground at somewhat lower speeds, but in a far more complicated environment involving pedestrians, other vehicles, cyclists and any number of other obstacles, is no different.

Looking at the recent Uber accident, it should be noted that there are situations where the physical limitations of a vehicle traveling at speed can’t be overcome. For example, even the best-performing vehicles take several seconds to slow from 100km/h to a full stop. If someone jumps in front of a car traveling at high speed, it doesn't matter how intelligent the onboard software is or how quickly it reacts. If the pedestrian is too close, the vehicle simply can’t break the laws of physics and won’t be able to slow down or steer out of the way in time.

I have watched the video released by the Tempe, Arizona police department showing the circumstances of the fatal crash. The investigation is still underway and the police haven’t released their final findings; however, the pedestrian was crossing the street in the dark, wearing a dark jacket, with no lights on her bike or person. She comes into view only a moment before the video stops, and it seems unlikely that any human driver would have been able to react in time to prevent this accident. The video doesn’t show the actual collision; if you are interested, you can find it easily enough online. There is obviously room for improvement in the vehicle’s sensor and control technology (as there always will be), and the investigation may still find that this event could have been prevented. Perhaps the vehicle could have seen or anticipated the pedestrian’s actions with a different type of night-vision sensor or radar that outperforms the human eye. However, it is impossible to foresee every road condition and obstacle that a self-driving or human-driven vehicle may encounter on public roads. No system will ever be perfect.
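To put the “several seconds to stop from 100km/h” point into numbers, here is a rough sketch using the textbook constant-deceleration model. The braking level (0.8 g, a plausible figure for hard braking on dry pavement) and the optional reaction time are illustrative assumptions, not data from the Uber investigation, and the function name `stopping` is hypothetical.

```python
G = 9.81  # standard gravity, m/s^2


def stopping(speed_kmh: float, decel_g: float = 0.8, reaction_s: float = 0.0) -> tuple[float, float]:
    """Return (time_s, distance_m) needed to stop from speed_kmh.

    Assumes constant deceleration of decel_g * G once braking starts,
    preceded by reaction_s seconds at full speed. Both defaults are
    illustrative assumptions, not measured values for any vehicle.
    """
    v = speed_kmh / 3.6               # convert km/h to m/s
    a = decel_g * G                   # deceleration in m/s^2
    brake_time = v / a                # t = v / a
    brake_dist = v * v / (2 * a)      # d = v^2 / (2a)
    return reaction_s + brake_time, v * reaction_s + brake_dist


if __name__ == "__main__":
    for label, reaction in (("instant reaction", 0.0), ("1.5 s reaction", 1.5)):
        t, d = stopping(100, reaction_s=reaction)
        print(f"100 km/h, {label}: {t:.1f} s and {d:.0f} m to stop")
```

Under these assumptions a car needs about 3.5 seconds and roughly 49 meters to stop from 100km/h even if braking starts instantly; adding a human-like reaction delay pushes the distance to around 90 meters.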

It should also be noted that the Uber vehicle involved in the crash had a person behind the wheel watching the road, and she was unable to take over and stop or steer the car out of the way in time. However, the back-up driver, whose job is to take over in an emergency, appears distracted in the moments leading up to the crash. It is likely that the vehicle’s systems reacted at some point before the collision and applied the brakes sooner than the person behind the wheel did or ever could have, and it still didn’t make a difference.

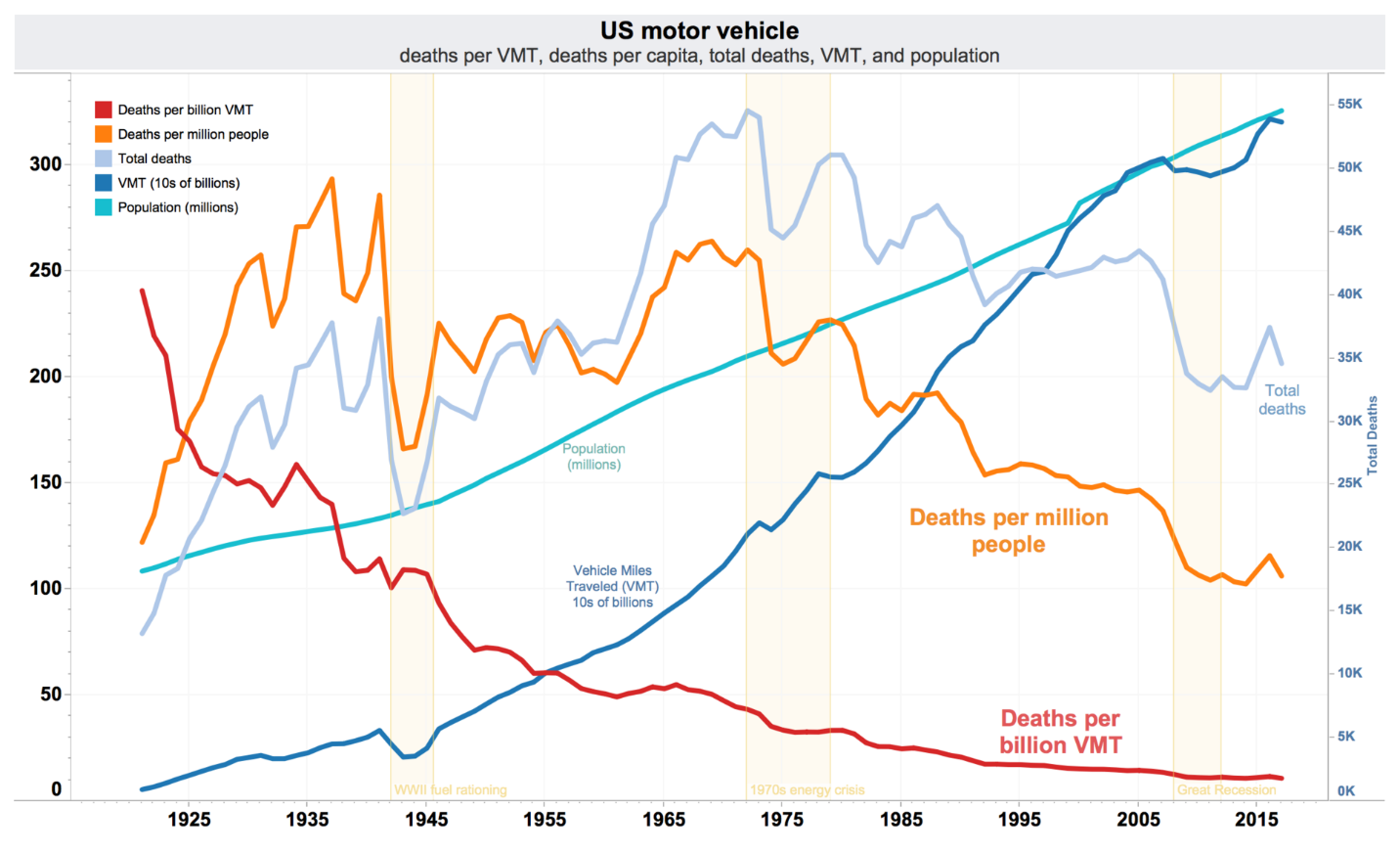

Source: NHTSA data / Wikipedia.org

It is interesting to note the media reaction to this particular event. Traffic fatalities involving human drivers occur on a regular basis and are seldom more than a footnote, even on the local news, yet this self-driving car fatality has made headlines around the world.

Self-driving cars will likely be held to a much higher standard than human drivers before they gain the acceptance and trust of the public. Being twice as safe, or even ten times as safe, may not seem like nearly enough. In the US alone, human drivers are involved in approximately 5.4 million traffic accidents per year, resulting in roughly 36,000 traffic fatalities. The simple math works out to roughly 100 traffic fatalities per day in the US, few if any of which make national headlines. Statistics for Canada aren’t as widely available as they are for the US, but based on roughly 1/10th the population and similar driving habits, we would expect approximately 3,600 traffic fatalities per year in Canada, or roughly ten per day. That said, vehicles do appear to have improved over the years, as the absolute number of fatalities is generally falling even though the population and vehicle miles traveled are steadily increasing.
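The back-of-the-envelope math in the paragraph above can be reproduced in a few lines. The only inputs are the figures quoted in the text (36,000 US fatalities per year and a Canadian population of roughly 1/10th the US); the constant and function names are illustrative, and treating Canada as a straight 1/10th scale-down is the same simplifying assumption the text makes.

```python
# Figures quoted in the text; the 1/10th ratio is the text's own simplifying assumption.
US_FATALITIES_PER_YEAR = 36_000
CANADA_POPULATION_DIVISOR = 10  # "roughly 1/10th the population"


def per_day(per_year: float, days: int = 365) -> float:
    """Convert an annual count to an average daily count."""
    return per_year / days


us_daily = per_day(US_FATALITIES_PER_YEAR)
canada_yearly = US_FATALITIES_PER_YEAR / CANADA_POPULATION_DIVISOR
canada_daily = per_day(canada_yearly)

print(f"US: ~{us_daily:.0f} fatalities/day")
print(f"Canada (scaled estimate): ~{canada_yearly:,.0f}/year, ~{canada_daily:.0f}/day")
```

The US figure comes out just under 99 per day, which rounds to the “roughly 100” quoted above, and the scaled Canadian estimate works out to about ten per day.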

We rarely hear of airline crashes involving major carriers, and when we do, it is a major news event. In the same way, I would suggest that the public wouldn’t tolerate more than a handful of accidents or fatalities per year involving self-driving cars. The engineers at Uber, Waymo, Tesla and other companies in this space certainly have their work cut out for them. But as the safety record of the airline industry shows, in time, the self-driving car industry will surely succeed.

The opinions expressed in this report are the opinions of the author and readers should not assume they reflect the opinions or recommendations of Richardson GMP Limited or its affiliates.

Richardson GMP Limited, Member Canadian Investor Protection Fund.

Richardson is a trade-mark of James Richardson & Sons, Limited. GMP is a registered trade-mark of GMP Securities L.P. Both used under license by Richardson GMP Limited.