Apple recently pushed an update to the iPhone’s software that allows users to simply say ‘Hey Siri’ to activate the phone’s built-in virtual assistant, Siri. The implication of this new ‘feature’ is that the phone’s microphone is always on and some part of the phone’s software is always listening for the owner’s voice to say the magic words ‘Hey Siri’ so that it can spring to life. I am somewhat apprehensive about having an internet-connected device on my person that is always listening, but given that Apple is pushing this feature out to users, I figured that I might as well try it out. This new feature does raise a number of questions:

Does this open me up to a hacker listening to everything that goes on in my life?

How much of what I say is recorded?

What does Apple do with all of these snippets of my voice and my questions?

Is this service just a data mining system to learn my personal habits?

Not that I have anything to hide; it just feels a bit creepy that this electronic big brother (or big sister, as the default is for Siri to have a female voice) is always on, always ready to respond to your commands. As an aside, the voice of Siri can be changed to speak in three English accents: American, British or Australian, with male and female versions of each. My kids find it humorous to toggle between the accents and get Siri to say silly things with each different voice. (According to my kids, male Australian voice = Crocodile Hunter = Crikey!)

In the previous version of the software, the user had to hold the iPhone’s home button down for one second to activate Siri. Imagine how much time people will save by eliminating this button-pushing nuisance and simply being able to ask Siri anything at any time.

So how useful is Siri?

I never really used the early iterations of Siri, as it was generally a frustrating experience: the software wouldn’t understand my voice, and even when it did, the answers were useless. When the new software is activated, Apple suggests a few test questions to familiarise you with how to interact with Siri and what types of questions it (she/he?) can answer.

Me: “Hey Siri – What’s the weather tomorrow?”

Siri responded: “The weather tomorrow will be pretty cold. Expect temperatures to reach -3C”

While this isn’t an inaccurate response, it is apparent that Siri is one of those products that was ‘Designed by Apple in California’. While -3C might qualify as ‘pretty cold’ for someone from California, we had just experienced nearly two straight weeks of temperatures in the -20C range, so -3C felt downright balmy from a Calgarian’s perspective. Perhaps Apple should hire some programmers from different climates to adjust the context of the answers based on the user’s location.
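To make that suggestion a little more concrete, here is a minimal sketch in Python of how an assistant could word a forecast relative to what the user has recently experienced, rather than against a fixed idea of ‘cold’. It is purely illustrative: the function name, the thresholds and the sample temperatures are my own assumptions and have nothing to do with how Siri actually works.

```python
from statistics import mean


def describe_temperature(forecast_c, recent_highs_c):
    """Describe a forecast relative to recent local temperatures.

    Hypothetical rule of thumb: compare the forecast with the average of
    the recent daily highs and pick a phrase based on the difference.
    The 3-degree and 10-degree thresholds are arbitrary assumptions.
    """
    baseline = mean(recent_highs_c)
    difference = forecast_c - baseline
    if difference >= 10:
        return "much milder than it has been"
    if difference >= 3:
        return "a bit milder than it has been"
    if difference <= -10:
        return "much colder than it has been"
    if difference <= -3:
        return "a bit colder than it has been"
    return "about the same as it has been"


# Two weeks of -20C in Calgary, then a forecast of -3C.
calgary = describe_temperature(-3, [-20] * 14)

# The same -3C forecast after a run of mild 15C days.
california = describe_temperature(-3, [15, 16, 14])
```

With these made-up numbers, the same -3C forecast would be described as milder for the Calgarian and colder for the Californian, which is the kind of context I was missing in Siri’s answer.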

When I tested it on another occasion, with temperatures dipping below -25C, the answer was equally humorous.

Testing out another query –

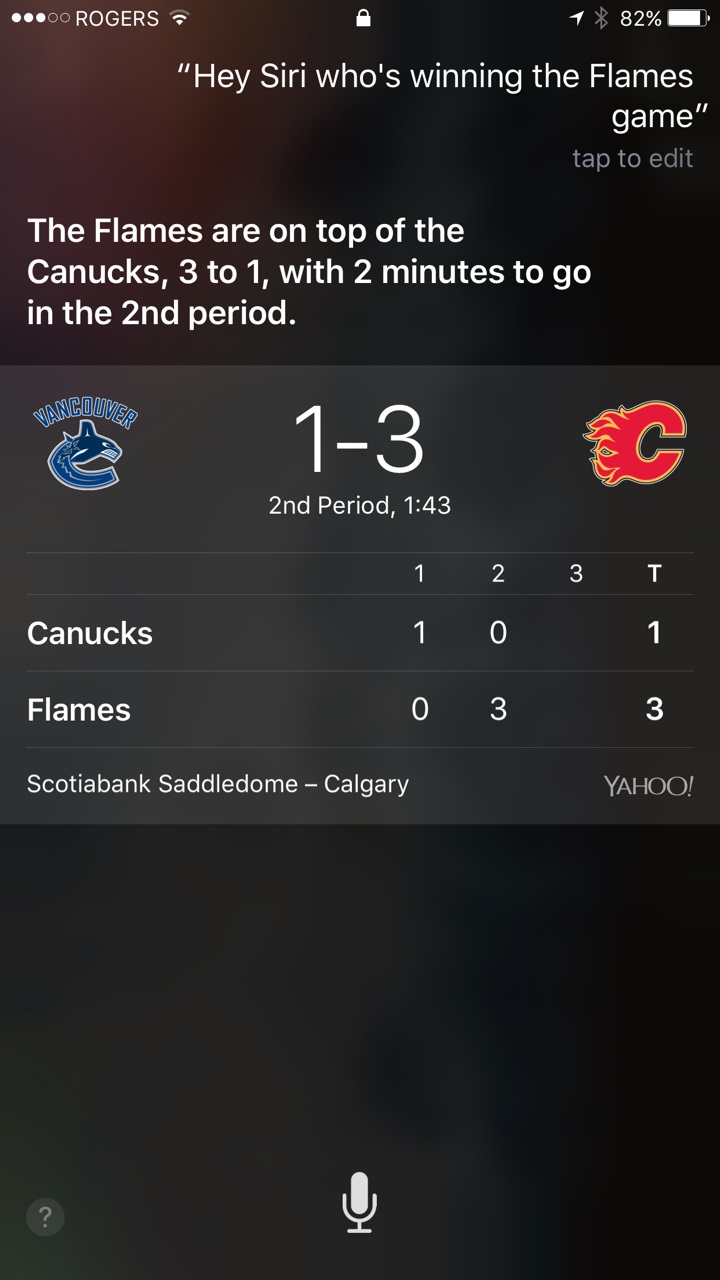

Me: “Hey Siri - who’s winning Flames game”

Siri: “The Flames are on top of the Canucks, 3 to 1, with 2 minutes to go in the 2nd period”

This was a pretty good response, although ‘on top of the Canucks’ seemed a bit of an awkward way to describe which team was winning. For some reason, it conjured the image of an old-school hockey brawl where the Flames were literally on top of the Canucks.

Thinking that I might check out the new Star Wars movie I asked Siri:

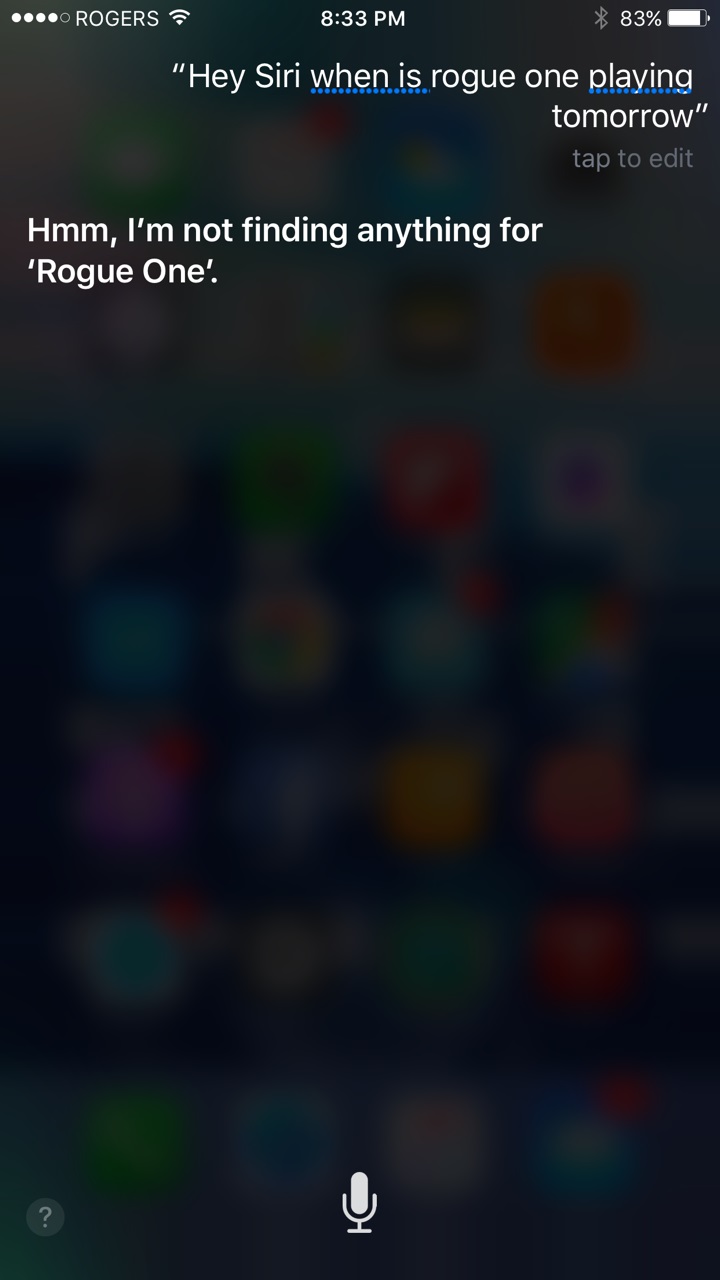

Me: “Hey Siri – when is Rogue One playing tomorrow”

Siri: “Hmm, I’m not finding anything for Rogue One”

Really, Siri? Have your programmers been living under a rock for the past 3 months and not heard that there is a new Star Wars movie out? Just to benchmark the result, I typed the first 3 letters, ‘Rog’, into Google, and Google happily completed the rest of my search without my having to type anything else into the search bar. The show times at the closest cinema to my house were in the top 3 search results. Compared to Google’s basic search, it seems that Siri’s ‘artificial intelligence’ still has a way to go.

If my latest ‘artificial intelligence’ helper can’t find a movie listing for the latest Hollywood blockbuster, how do we expect a self-driving car to make a life-or-death decision in a split second? One of the debates in the world of self-driving cars is how a car, or the ‘intelligence’ programmed into it, should decide what to do in a crash situation.

The discussion often boils down to simple scenarios that resemble the ‘trolley problem’ of ethics. The situation most often described in the ‘trolley problem’ goes something like: “If a trolley is speeding out of control towards a group of people standing on the tracks, and you are a bystander who can pull a switch that diverts the trolley onto a side track where there is a lone worker, what should you do?” In this scenario, the choice is simplified down to ‘do nothing and risk killing many’ or ‘act and risk killing an individual but save the lives of a group’. When the situation is described with few details, most people will answer that many lives are worth more than one, so they would act and switch the tracks. However, it gets murkier when you add different details and scenarios. What if there was a baby on the side track? What if the worker was a young father or mother and the group was made up of elderly people who had already lived full lives? What if it was a group of criminals in the way and the individual was just an innocent rail worker? How would you know any of this? Is there a right answer? I doubt that any group of people could ever reach a consensus on the right action to take in a more complex scenario.

Another debate is who becomes liable when a self-driving car has to decide between one group and another. Does the car favour the owner/occupants of the vehicle? What if there is one person in the car and the brakes fail while headed towards a group of people crossing the street? Would the owner’s family sue the car manufacturer for programming the car’s AI system to act in favour of the pedestrians on the street as opposed to saving the occupants of the vehicle? This blog seems to be bringing up more questions than answers, but the topic is worth discussing: an accepted framework for the right response to these situations will need to be developed as self-driving cars go from research and development projects to reality.

The MIT Media Lab has created an interesting website that allows people to test their moral compass in a variety of these scenarios. It is worth trying out to see where your own moral sensibilities land. The website has you decide whether the car should save the occupants or the pedestrians, young vs old, athletic vs overweight, dogs/cats vs people, etc. It starts off with simple scenarios, but as you go through a dozen, the situations get increasingly complicated, as they likely would in reality.

Visit: http://moralmachine.mit.edu/

Act and save pedestrians at the expense of the occupants or do nothing and hit the pedestrians?

Act and hit the dogs or don’t act and sacrifice the occupants?

Act and hit a group that includes a criminal/bank robber or don’t act and hit a group that includes a medical doctor?

How would you decide in these scenarios?

The opinions expressed in this report are the opinions of the author and readers should not assume they reflect the opinions or recommendations of Richardson GMP Limited or its affiliates. Assumptions, opinions and estimates constitute the author’s judgement as of the date of this material and are subject to change without notice. We do not warrant the completeness or accuracy of this material, and it should not be relied upon as such. Before acting on any recommendation, you should consider whether it is suitable for your particular circumstance and, if necessary, seek professional advice. Past performance is not indicative of future results. The comments contained herein are general in nature and are not intended to be, nor should be construed to be, legal or tax advice to any particular individual. Accordingly, individuals should consult their own legal or tax advisors for advice with respect to the tax consequences to them, having regard for their own particular circumstances. Richardson GMP Limited is a member of Canadian Investor Protection Fund. Richardson is a trademark of James Richardson & Sons, Limited. GMP is a registered trade-mark of GMP Securities L.P. both used under license by Richardson GMP Limited.